1. Model Introduction

Latent Dirichlet Allocation (LDA) is a three-layer Bayesian model consisting of a document layer, a topic layer, and a feature-word layer. Each layer is governed by its own random variables or parameters. The basic idea is that a text is generated as a random mixture of latent topics, and each topic corresponds to a specific distribution over feature words. The LDA model assumes that every document contains multiple hidden topics. To generate a document, first generate the document's topic distribution, and then generate its words one at a time. To generate a word, a topic is randomly selected according to the document's topic distribution, and then a word is randomly selected according to that topic's word distribution. This process is repeated until the document is complete. The final topic distribution is determined by the distribution parameters.
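The generative process described above can be sketched in a few lines of Python. This is a minimal illustration, not a training algorithm: the function names, the symmetric Dirichlet concentration values (0.5), and the sizes (3 topics, 6 vocabulary words, 20 words per document) are all assumptions chosen for the example and do not come from the model description.

```python
import random

def sample_dirichlet(rng, concentration, size):
    """Draw from a symmetric Dirichlet by normalising independent Gamma draws."""
    draws = [rng.gammavariate(concentration, 1.0) for _ in range(size)]
    total = sum(draws)
    return [d / total for d in draws]

def generate_document(rng, topic_word, alpha, doc_length):
    """Sample one document: a topic distribution, then a (topic, word) pair per position."""
    num_topics = len(topic_word)
    vocab_size = len(topic_word[0])
    # Step 1: generate the topic distribution of the document.
    theta = sample_dirichlet(rng, alpha, num_topics)
    topics, words = [], []
    for _ in range(doc_length):
        # Step 2: select a topic according to the document's topic distribution.
        z = rng.choices(range(num_topics), weights=theta)[0]
        # Step 3: select a word according to that topic's word distribution.
        w = rng.choices(range(vocab_size), weights=topic_word[z])[0]
        topics.append(z)
        words.append(w)
    return theta, topics, words

rng = random.Random(0)
# Hypothetical setup: 3 topics, each a distribution over a 6-word vocabulary.
topic_word = [sample_dirichlet(rng, 0.5, 6) for _ in range(3)]
theta, topics, words = generate_document(rng, topic_word, alpha=0.5, doc_length=20)
print("topic distribution:", [round(t, 3) for t in theta])
print("word ids:", words)
```

Fitting an LDA model runs this process in reverse: given only the observed words, it estimates the topic distribution of each document and the word distribution of each topic.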

2. R&D Bases

(1) South China University of Technology. The Key Laboratory of Guangdong New Media and Brand Communication Innovation. An Empirical Study on Brand Image Communication and Thematic Model Calculation of “One Belt One Road” Countries Based on LDA. 2017.

(2) Jason Hou-Liu. Benchmarking and Improving Recovery of Number of Topics in Latent Dirichlet Allocation Models. 2018, 4.

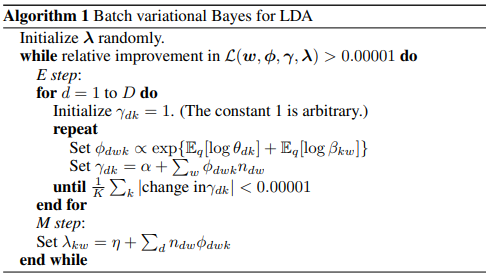

3. Algorithm Description

The algorithm is a three-layer Bayesian generative model composed of documents, topics, and words. Each document is represented as a multinomial distribution over topics, and each topic is represented as a multinomial distribution over words.
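For a single document, the two distributions described above combine into the following joint probability. The notation is the conventional one for LDA and is an assumption here, since this page does not define symbols: $\theta$ is the document's topic distribution, $z_n$ and $w_n$ are the topic and word at position $n$, $N$ is the document length, and $\alpha$ and $\beta$ are the parameters of the topic prior and of the per-topic word distributions.

$$
p(\theta, z, w \mid \alpha, \beta) = p(\theta \mid \alpha) \prod_{n=1}^{N} p(z_n \mid \theta)\, p(w_n \mid z_n, \beta)
$$

Here $p(\theta \mid \alpha)$ is a Dirichlet distribution, $p(z_n \mid \theta)$ selects a topic from the document's topic distribution, and $p(w_n \mid z_n, \beta)$ selects a word from the word distribution of the chosen topic.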

4. Applicable Scenarios

(1) Summarizing the topics of a text collection, which is suitable for topic classification.

(2) Finding similar documents, recommending content, and ranking topics based on regression analysis.
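As an illustration of the similar-document scenario, one common approach is to compare the topic distributions that LDA assigns to each document. The sketch below uses the Hellinger distance for that comparison; the choice of distance, the function names, and the document-topic vectors are hypothetical and serve only as an example. In practice the vectors would come from a fitted model.

```python
import math

def hellinger(p, q):
    """Hellinger distance between two discrete distributions (0 means identical)."""
    return math.sqrt(0.5 * sum((math.sqrt(a) - math.sqrt(b)) ** 2 for a, b in zip(p, q)))

def most_similar(query, doc_topics, top_n=2):
    """Return the names of the top_n documents whose topic distributions are closest to query."""
    ranked = sorted(doc_topics.items(), key=lambda item: hellinger(query, item[1]))
    return [name for name, _ in ranked[:top_n]]

# Hypothetical per-document topic distributions over 3 topics.
doc_topics = {
    "doc_a": [0.80, 0.15, 0.05],
    "doc_b": [0.10, 0.85, 0.05],
    "doc_c": [0.70, 0.20, 0.10],
}

query = [0.75, 0.20, 0.05]
print(most_similar(query, doc_topics))  # ['doc_a', 'doc_c']
```

The same ranking can drive content recommendation: documents closest to those a reader has already viewed are candidates to recommend next.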